Between 2023 and 2025, a new generation of vishing (voice phishing) attacks emerged, combining social engineering with AI-based voice cloning. In this context, a CEO’s voice is no longer a signal of trust. It has become a full-fledged attack vector, and the phenomenon is rapidly gaining momentum.

Advances in voice-cloning technology have dramatically lowered the technical barrier. Producing a usable voice imitation no longer requires advanced AI expertise. What an attacker needs instead is the ability to create urgency and exploit authority.

Today, only a few seconds of audio can be enough to generate a credible synthetic voice. With several dozen seconds, the result can become convincing enough to deceive a professional interlocutor.

This raises a fundamental question: what is actually preventing the industrialisation of CEO vishing attacks?

In this article:

- An overview of the scale of the threat and its consequences

- Who the attackers are

- Their modus operandi in 3 steps

- Protective measures to reduce the risk

The Scale of the Threat and Vishing Consequences

A documented acceleration

Voice-based fraud is growing faster than traditional email phishing. Multiple threat-intelligence reports converge on the same finding:

- According to threat intelligence analyses compiled by IBM and several European Security Operations Centers (SOCs), vishing attacks increased by more than 400% between 2023 and 2025, with a growing share involving highly realistic synthetic voices.

- The FBI, through its Internet Crime Complaint Center (IC3) reports, confirms a sustained rise in Business Email Compromise (BEC) fraud, with a significant portion now shifting to the voice channel, which is considered more effective at bypassing existing controls.

- In Europe, Europol classifies voice deepfakes among the major emerging cyber threats, particularly impacting SMEs and mid-sized enterprises that lack mature internal procedures and governance mechanisms.

Beyond financial loss

The impact rarely stops at a fraudulent transfer:

- Financial fraud: urgent wire transfers, bank detail changes, payment approvals.

- Identity compromise: MFA resets, disclosure of internal information.

- Organisational damage: erosion of internal trust, hesitation to act.

- Legal and reputational exposure: failure of internal controls and governance.

Documented cases:

In 2024, Mark Read, CEO of WPP—one of the world’s largest advertising and communications groups—was targeted in a sophisticated fraud attempt involving an AI-generated voice imitation combined with fake visual elements distributed via WhatsApp and Microsoft Teams.

The attack was aimed at convincing a senior executive to take action (financial transfer and/or business-related steps) by impersonating the CEO. The attempt was narrowly thwarted thanks to internal vigilance, but it illustrates that even top-tier executives are now targeted by deepfake scams combining synthetic voices and AI-generated visuals.

In January 2026, in Switzerland, an entrepreneur was deceived into transferring several million Swiss francs after phone calls in which an AI-manipulated voice was presented as that of a trusted business partner. The case is currently under investigation.

Who Are the Attackers?

Contrary to popular belief, these attacks are not primarily carried out by elite AI researchers.

Three profiles dominate:

- Organised financial crime groups

Already active in BEC schemes, they have integrated voice cloning as a low-cost, high-impact capability.

- Tool-enabled opportunists

Individuals or small groups using commercial voice-cloning platforms with minimal technical skills.

- Crime-as-a-service providers

Some actors now offer voice cloning as an on-demand service, mirroring phishing-as-a-service models.

Technical sophistication is no longer the limiting factor. Social and organisational scenarios make the difference.

How the Vishing Attack Works — in 3 steps

Step 1 — Voice collection

Common sources include:

- public videos, interviews, podcasts,

- conference recordings and webinars,

- internal voicemail messages,

- or short pretext calls designed to capture a few seconds of speech.

Today, 10 to 30 seconds of audio is often enough to create a usable clone.

Step 2 — Voice model creation

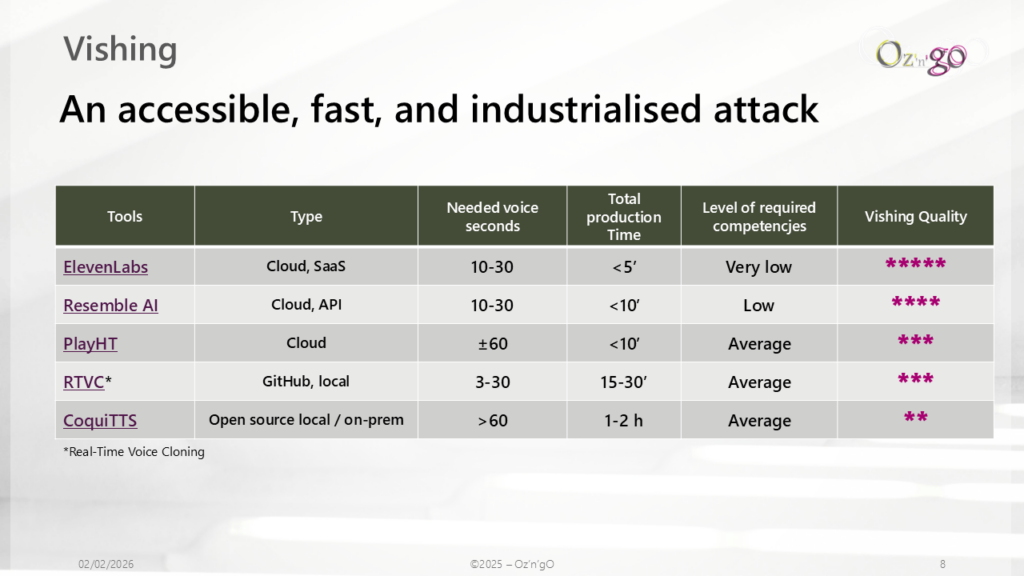

Attackers rely on either commercial cloud-based voice-cloning platforms or open-source tools run locally.

The process is broadly the same:

- extraction of a vocal “fingerprint” (timbre, rhythm, prosody),

- adaptation of a speech synthesis model,

- generation of a voice capable of reading arbitrary text convincingly.

Step 3 — The fraudulent call

Two main modes are used:

- Text-to-speech: pre-written scripts (orders, approvals, urgency).

- Near real-time voice conversion: interactive conversations.

Effectiveness depends less on perfect audio quality than on authority, urgency and context.

Why It Works

Human and organisational controls are poorly adapted:

- The human ear is not reliable when confronted with high-quality synthetic voices.

- Voice triggers automatic trust mechanisms, especially under pressure.

- Many organisations lack explicit rules defining what a CEO can — or cannot — request by phone.

As a result, voice is still treated as an implicit authentication factor, even though it no longer deserves that status.

How to Reduce the Vishing Risk

Organisational measures (critical)

- Formal prohibition of authorising sensitive actions based on voice alone.

- Mandatory out-of-band verification for exceptional requests.

- Clear rules on who can request what, through which channel.

- Non-negotiable delay for urgent financial transactions.
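To make these rules concrete, here is a minimal sketch of how they could be encoded in an approval workflow. Everything in it is an assumption made for illustration: the action names, the channel names, the four-hour delay and the `evaluate` function are hypothetical and do not describe any particular product or policy.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta

# Hypothetical policy: which channel may carry which sensitive action.
# "phone" appears nowhere, so voice alone can never authorise anything listed here.
ALLOWED_CHANNELS = {
    "wire_transfer": {"payment_workflow"},
    "bank_detail_change": {"supplier_portal"},
    "mfa_reset": {"it_ticket"},
}

# Hypothetical non-negotiable delay before a sensitive request can proceed.
COOLING_OFF = timedelta(hours=4)


@dataclass
class Request:
    action: str
    channel: str                        # channel the request arrived on
    received_at: datetime
    verified_out_of_band: bool = False  # e.g. call-back to a known number


def evaluate(req: Request, now: datetime) -> str:
    """Return "allow", "hold" or "reject" for a request."""
    allowed = ALLOWED_CHANNELS.get(req.action)
    if allowed is None:
        return "allow"    # not a sensitive action under this policy
    if req.channel not in allowed:
        return "reject"   # wrong channel: must be resubmitted properly
    if not req.verified_out_of_band:
        return "hold"     # wait for independent verification
    if now - req.received_at < COOLING_OFF:
        return "hold"     # the delay applies however urgent the request
    return "allow"
```

With this sketch, a wire transfer requested by phone is rejected even if the caller sounds exactly like the CEO, and a correctly submitted one stays on hold until it has been verified independently and the delay has elapsed. A real policy would also need to cover who is entitled to make each request, which is left out here for brevity.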

Human measures

- Targeted awareness for high-risk roles (finance, executive assistants, IT, HR).

- Scenario-based simulations focusing on decision-making, not blame.

Technical measures (supporting)

- Logging and traceability of sensitive requests.

- Monitoring of abnormal call patterns.

- Voice authentication may serve as an initial signal, but it must be combined with an independent additional measure for any sensitive action.
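As an illustration of the logging and monitoring points above, the following sketch records each call to a high-risk role and flags a burst of calls from unknown numbers. The `CallMonitor` class, the one-hour window and the threshold of three calls are hypothetical choices, not recommended values, and a real deployment would rely on the organisation's telephony and SIEM tooling rather than a standalone script.

```python
import json
import logging
from collections import deque
from datetime import datetime, timedelta

logger = logging.getLogger("sensitive_requests")

WINDOW = timedelta(hours=1)   # hypothetical observation window
MAX_UNKNOWN_CALLS = 3         # hypothetical alert threshold


class CallMonitor:
    def __init__(self, known_numbers):
        self.known_numbers = set(known_numbers)
        self.recent_unknown = deque()  # timestamps of calls from unknown numbers

    def record(self, caller: str, callee_role: str, at: datetime) -> bool:
        """Log the call and return True if the recent pattern looks abnormal."""
        # Traceability: one structured log line per call.
        logger.info(json.dumps(
            {"caller": caller, "callee_role": callee_role, "at": at.isoformat()}
        ))
        if caller in self.known_numbers:
            return False
        self.recent_unknown.append(at)
        # Drop timestamps that have fallen out of the sliding window.
        while self.recent_unknown and at - self.recent_unknown[0] > WINDOW:
            self.recent_unknown.popleft()
        return len(self.recent_unknown) >= MAX_UNKNOWN_CALLS
```

A flag raised here is only a signal for review. In line with the measures above, it supports the organisational rules and does not replace independent verification of the request itself.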